Detection Methods

The "Detection Methods" field within many CWE entries conveys which types of assessment activities can find that weakness. More CWE entries will have this field filled in over time. The recent Institute for Defense Analyses (IDA) State of the Art Research report conducted for DoD provides additional information for use across CWE in this area. Labels for the Detection Methods being used within CWE are:

With this type of information (shown in the table below), we can see which of the specific CWEs that can lead to a specific type of technical impact are detectable by dynamic analysis, static analysis, and fuzzing, and which are not. The table is incomplete because many CWE entries do not yet list a detection method.
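The grouping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the entry identifiers, impact names, and method assignments are hypothetical placeholders rather than data taken from CWE, and `impact_by_method` is a helper name invented for this sketch.

```python
from collections import defaultdict

# Hypothetical records (assumption): real values would come from the
# detection-method and technical-impact information in actual CWE entries.
ENTRIES = [
    {"id": "CWE-EXAMPLE-1",
     "impacts": ["Execute Unauthorized Code"],
     "methods": ["Static Analysis", "Fuzzing"]},
    {"id": "CWE-EXAMPLE-2",
     "impacts": ["Execute Unauthorized Code", "Read Data"],
     "methods": ["Dynamic Analysis"]},
    {"id": "CWE-EXAMPLE-3",
     "impacts": ["Read Data"],
     "methods": []},  # entry with no detection method listed
]

METHODS = ["Dynamic Analysis", "Static Analysis", "Fuzzing"]


def impact_by_method(entries, methods):
    """Build a technical impact vs. detection method table.

    Returns (table, undocumented):
      table[impact][method] -> list of entry ids detectable by that method
      undocumented[impact]  -> list of entry ids with no method listed
    """
    table = defaultdict(lambda: {m: [] for m in methods})
    undocumented = defaultdict(list)
    for entry in entries:
        for impact in entry["impacts"]:
            row = table[impact]  # creates the row on first access
            if not entry["methods"]:
                undocumented[impact].append(entry["id"])
            for method in entry["methods"]:
                if method in row:
                    row[method].append(entry["id"])
    return dict(table), dict(undocumented)


table, undocumented = impact_by_method(ENTRIES, METHODS)
for impact, row in table.items():
    print(impact, row)
print("No detection method listed:", undocumented)
```

Tracking the entries without a listed method separately keeps the gaps visible, which mirrors the caveat that the table is incomplete.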

Understanding the relationship between various assessment/detection methods and the artifacts available over the life-cycle will enable you and your decision-makers to plan:

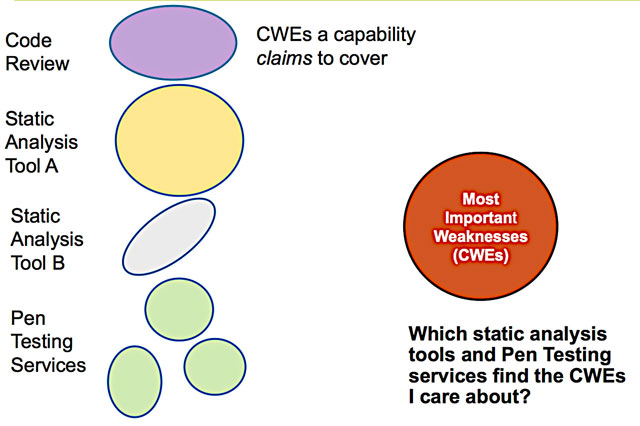

Matching Claims to Needs

As shown above, matching coverage claims against the weaknesses your organization has prioritized can assist you in planning assurance activities. Doing so lets you combine the groupings of weaknesses that lead to specific technical impacts with the specific detection methods needed to gain insight into whether the dangerous issues have been addressed. In the future, the same type of information in the technical impact vs. detection method table can be used to produce an assurance tag that could be attached to an executable code bundle, leveraging ISO/IEC 19770-2:2009 as implemented for Software Identification (SWID) Tags. This will allow communication with others, so that they may gain insight into the assurance efforts made on a piece of software created by someone else. The basic idea is that a SWID Tag would contain assurance information conveying which types of assurance activities and efforts were undertaken against which types of failure modes. The receiving enterprise could then review this tag, match that information against how it intends to use the software and which failure modes concern it most, and attest to whether the appropriate level of attention was paid to the technical impacts it is trying to avoid. This same table of information can also support ISO/IEC 15026 assurance cases.
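The receiving enterprise's review step can be sketched as a simple comparison of claims against needs. This is a minimal illustration, not the SWID Tag format: plain dictionaries stand in for whatever assurance information a tag would carry, the impact and method names are hypothetical, and `unmet_needs` is a helper name invented for this sketch.

```python
def unmet_needs(claims, needs):
    """Compare a supplier's assurance claims with a receiver's needs.

    claims: technical impact -> set of detection methods the supplier applied
    needs:  technical impact -> set of detection methods the receiver requires
    Returns: technical impact -> sorted list of required methods not claimed
    """
    gaps = {}
    for impact, required in needs.items():
        missing = required - claims.get(impact, set())
        if missing:
            gaps[impact] = sorted(missing)
    return gaps


# Hypothetical claims, as a tag might assert them (assumption).
claims = {
    "Execute Unauthorized Code": {"Static Analysis", "Fuzzing"},
    "Read Data": {"Static Analysis"},
}

# Hypothetical priorities of the receiving enterprise (assumption).
needs = {
    "Execute Unauthorized Code": {"Fuzzing"},
    "Read Data": {"Static Analysis", "Dynamic Analysis"},
    "Modify Data": {"Static Analysis"},
}

gaps = unmet_needs(claims, needs)
print(gaps)
```

An empty result would mean every prioritized technical impact was covered by the methods the receiver asked for. Any remaining entries show where the receiver may want additional assessment before relying on the software.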